
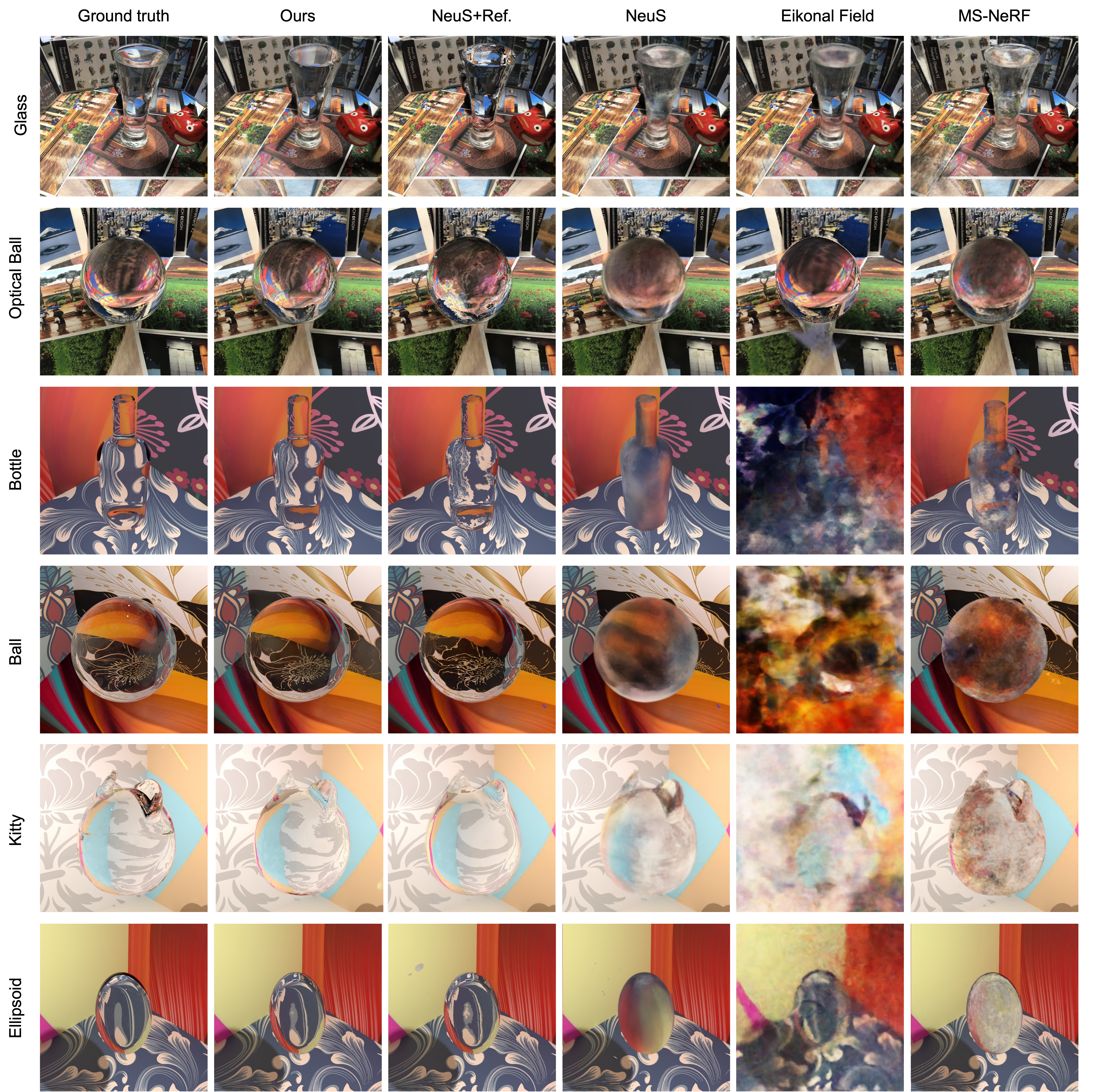

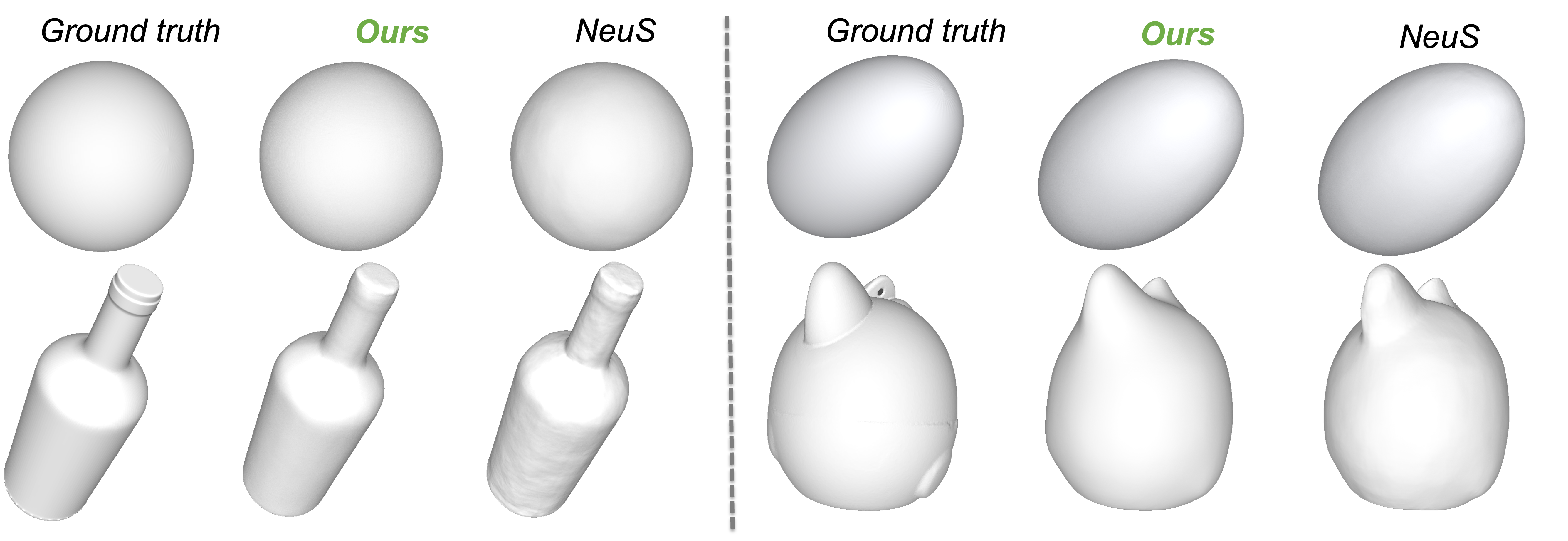

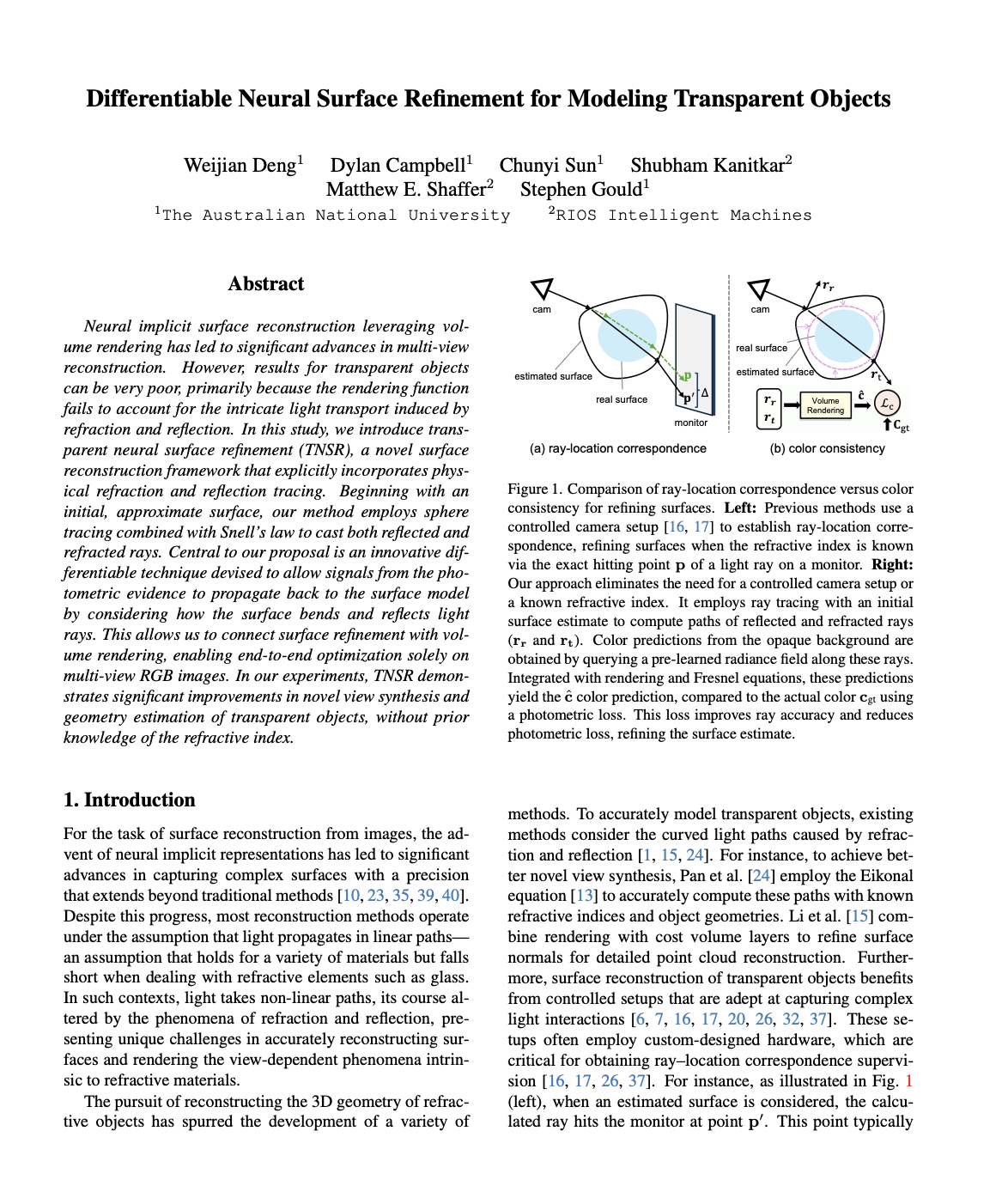

Neural implicit surface reconstruction leveraging volume rendering has led to significant advances in multi-view reconstruction.

However, results for transparent objects can be very poor, primarily because the rendering function fails to account for the intricate light transport induced by refraction and reflection.

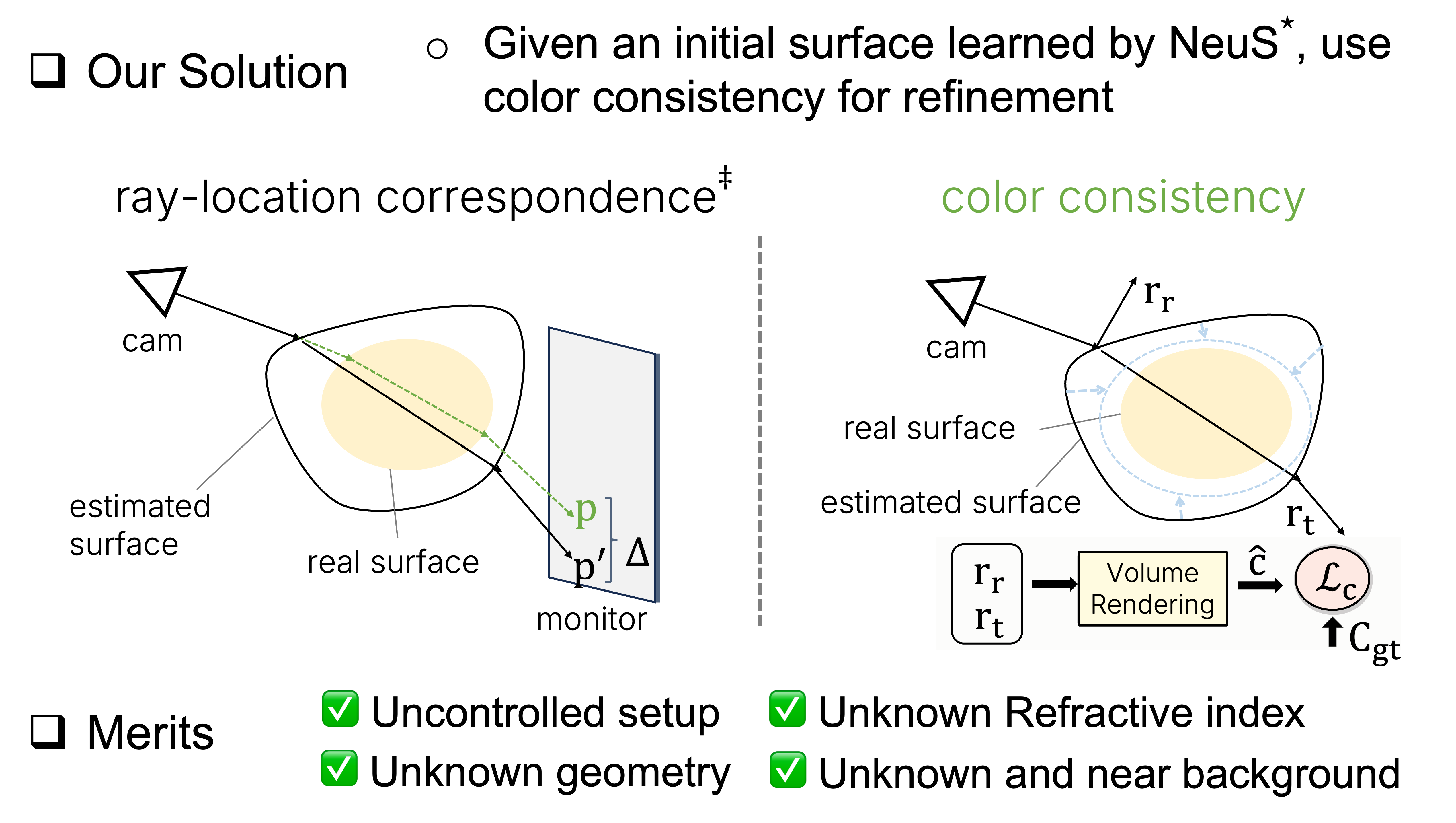

In this study, we introduce transparent neural surface refinement (TNSR), a novel surface reconstruction framework that explicitly incorporates physical refraction and reflection tracing.

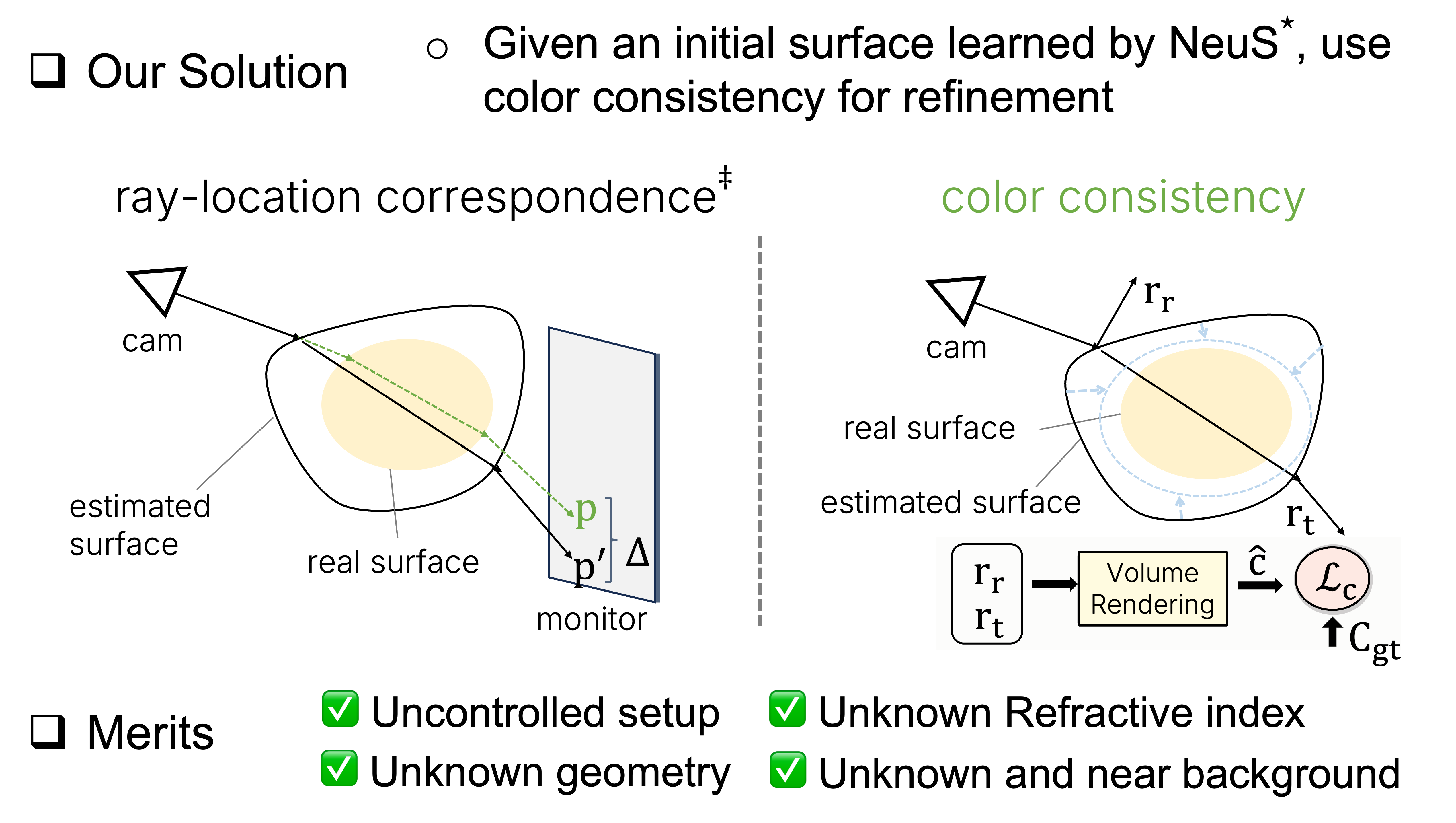

Beginning with an initial, approximate surface, our method employs sphere tracing combined with Snell's law to cast both reflected and refracted rays.
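As a rough illustration of the ray casting described above, the following minimal sketch sphere-traces a ray to a signed distance function and then computes the reflected and refracted directions with Snell's law. It is not the TNSR implementation: the function names (`sphere_trace`, `sdf_normal`, `reflect`, `refract`), the analytic unit-sphere SDF standing in for a learned surface, and the refractive index of 1.5 used in the usage note are all assumptions made for illustration.

```python
import numpy as np

def sphere_sdf(p, center=(0.0, 0.0, 0.0), radius=1.0):
    """Signed distance to a sphere; a stand-in for a learned neural SDF."""
    return np.linalg.norm(p - np.asarray(center)) - radius

def sphere_trace(origin, direction, sdf, max_steps=128, eps=1e-5, t_max=100.0):
    """March along the ray by the SDF value until the surface is reached.

    Returns the hit point, or None if nothing is hit within t_max.
    """
    t = 0.0
    for _ in range(max_steps):
        p = origin + t * direction
        dist = sdf(p)
        if dist < eps:
            return p
        t += dist
        if t > t_max:
            break
    return None

def sdf_normal(p, sdf, h=1e-4):
    """Unit surface normal from central differences of the SDF."""
    grad = np.array([
        (sdf(p + h * e) - sdf(p - h * e)) / (2.0 * h)
        for e in np.eye(3)
    ])
    return grad / np.linalg.norm(grad)

def reflect(d, n):
    """Mirror unit direction d about unit normal n."""
    return d - 2.0 * np.dot(d, n) * n

def refract(d, n, eta):
    """Refract unit direction d using Snell's law.

    eta is n_incident / n_transmitted, and n must point against d.
    Returns None on total internal reflection.
    """
    cos_i = -np.dot(d, n)
    sin2_t = eta ** 2 * (1.0 - cos_i ** 2)
    if sin2_t > 1.0:
        return None
    cos_t = np.sqrt(1.0 - sin2_t)
    return eta * d + (eta * cos_i - cos_t) * n
```

For example, a ray cast from `np.array([0.0, 0.0, -3.0])` along `+z` reaches the unit sphere at `(0, 0, -1)`; calling `refract(d, n, 1.0 / 1.5)` there with the normal from `sdf_normal` models entry from air into a glass-like medium, under the assumed index of 1.5.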

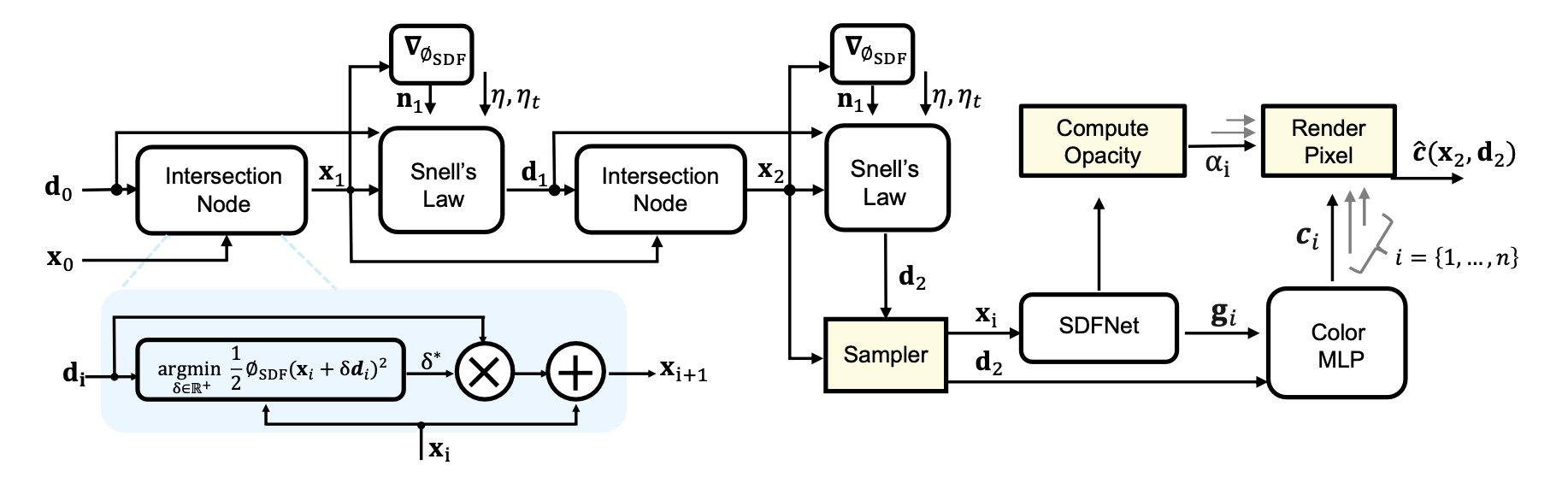

Central to our proposal is a differentiable technique that lets signals from the photometric evidence propagate back to the surface model by accounting for how the surface bends and reflects light rays.

This allows us to connect surface refinement with volume rendering, enabling end-to-end optimization solely on multi-view RGB images.

In our experiments, TNSR demonstrates significant improvements in novel view synthesis and geometry estimation of transparent objects, without prior knowledge of the refractive index.
